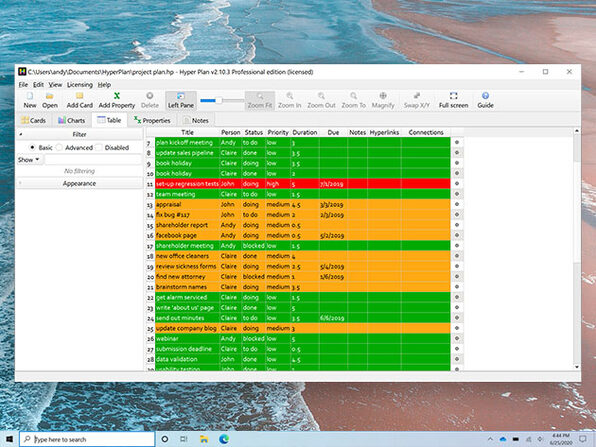

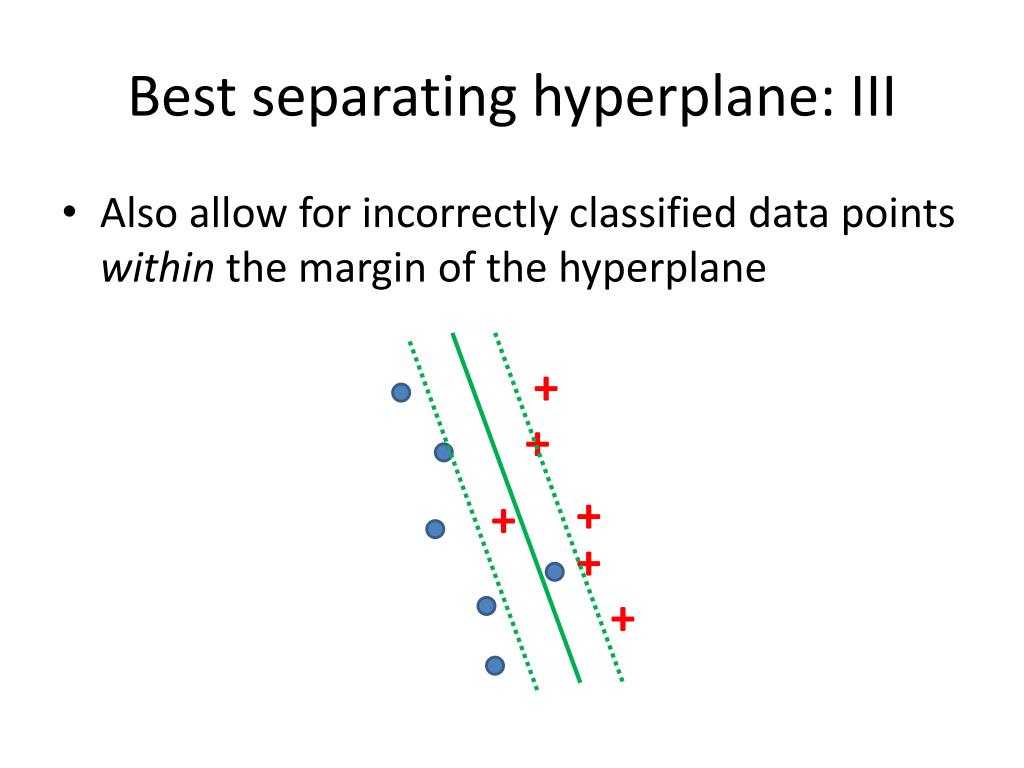

Linear kernel: it is just the dot product of two feature vectors. Polynomial kernel: it is a simple non-linear transformation of the data with a polynomial degree added. Gaussian kernel: it is the most used SVM kernel, usually chosen for non-linear data.

[…] SVM-Recursive Feature algorithm and 1-norm SVM, and propose a third, hybrid 1-norm RFE. Finally, we implement these three algorithms and compare their performances on synthetic and microarray datasets. 1-norm SVM gives the lowest test accuracy on synthetic datasets but selects more features.

How can I get a hyperplane in the other 3 graphs (see picture)? Does anyone know some smart code for my purpose?

Suppose you fitted an SVM classifier as follows, where `X` is an array with 3 columns (features) and `y` has the same length as `X` and represents the classes: `model = SVC(kernel="linear").fit(X, y)`. The hyperplane is defined as ax + by + cz + d = 0, where x, y, z are the three features, a, b, c are the fitted coefficients of the model (`w = model.coef_`) and d is the fitted intercept (`model.intercept_`). To express the hyperplane as a function of the z coordinate (the third feature), create a mesh grid `xx`, `yy` over the x and y coordinates (the first two features) and solve the plane equation for z, which requires c to be non-zero: `zz = -(a/c)*xx - (b/c)*yy - d/c`. Then, using plotly for visualization, plot the three columns of `X` in a `go.Figure` as a 3D scatter with `mode="markers"`, `showlegend=False` and `marker=dict(color=y, size=2.5, line=dict(color="black", width=1))`, and add the plane given by `xx`, `yy`, `zz` to the same figure.
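The hyperplane recipe described above (fit a linear `SVC` on three features, then solve ax + by + cz + d = 0 for z over a mesh grid) can be sketched roughly as follows. This is a minimal sketch under stated assumptions, not the original poster's code: the synthetic data, the helper names `hyperplane_mesh` and `build_figure`, the grid resolution, and the choice of `go.Surface` to draw the plane are all illustrative.

```python
import numpy as np
from sklearn.svm import SVC


def hyperplane_mesh(model, X, n=20):
    """Mesh grids (xx, yy, zz) of the separating plane of a binary linear SVC on 3 features."""
    a, b, c = model.coef_[0]      # fitted coefficients for x, y, z
    d = model.intercept_[0]       # fitted intercept
    xs = np.linspace(X[:, 0].min(), X[:, 0].max(), n)
    ys = np.linspace(X[:, 1].min(), X[:, 1].max(), n)
    xx, yy = np.meshgrid(xs, ys)
    # Solve a*x + b*y + c*z + d = 0 for z (assumes c != 0).
    zz = -(a / c) * xx - (b / c) * yy - d / c
    return xx, yy, zz


def build_figure(X, y, xx, yy, zz):
    """3D scatter of the data plus the plane; plotly is imported lazily (assumed installed)."""
    import plotly.graph_objects as go

    fig = go.Figure()
    fig.add_trace(go.Scatter3d(
        x=X[:, 0], y=X[:, 1], z=X[:, 2],
        mode="markers", showlegend=False,
        marker=dict(color=y, size=2.5, line=dict(color="black", width=1)),
    ))
    # go.Surface is one possible way to draw the plane (an assumption of this sketch).
    fig.add_trace(go.Surface(x=xx, y=yy, z=zz, showscale=False, opacity=0.5))
    return fig


# Hypothetical synthetic data: two Gaussian clusters in 3D.
rng = np.random.default_rng(0)
X = np.vstack([rng.normal(-2, 1, (50, 3)), rng.normal(2, 1, (50, 3))])
y = np.array([0] * 50 + [1] * 50)

model = SVC(kernel="linear").fit(X, y)
xx, yy, zz = hyperplane_mesh(model, X)
```

Calling `build_figure(X, y, xx, yy, zz).show()` should then render the points together with the plane. If the fitted coefficient of the third feature is close to zero, the plane is nearly vertical and it is safer to solve for x or y instead.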

I want to create a stylized graph to explain the SVM classifier, and I want to visualize stylized hyperplanes for the 4 graphs. Code and graph:

    import pandas as pd
    import plotly.graph_objs as go
    from plotly.subplots import make_subplots

    columns = ...
    df = pd.DataFrame(...)

    # Panel (1, 1): 2D scatter of both classes plus a separating line
    fig.add_trace(go.Scatter(x=x1, y=y1, mode='markers'), row=1, col=1)
    fig.add_trace(go.Scatter(x=x2, y=y2, mode='markers'), row=1, col=1)
    fig.add_trace(go.Scatter(x=..., y=..., mode='lines'), row=1, col=1)

    # Panel (1, 2): 3D scatter of both classes plus a line
    fig.add_trace(go.Scatter3d(x=x1, y=y1, z=z1, mode='markers'), row=1, col=2)
    fig.add_trace(go.Scatter3d(x=x2, y=y2, z=z2, mode='markers'), row=1, col=2)
    fig.add_trace(go.Scatter3d(x=..., y=..., z=100 * ..., mode='lines'), row=1, col=2)

    # Panel (2, 1): 3D scatter of both classes
    fig.add_trace(go.Scatter3d(x=x1, y=y1, z=z1, mode='markers'), row=2, col=1)
    fig.add_trace(go.Scatter3d(x=x2, y=y2, z=z2, mode='markers'), row=2, col=1)

    # Panel (2, 2): 3D scatter of the first class
    fig.add_trace(go.Scatter3d(x=x1, y=y1, z=z1, mode='markers'), row=2, col=2)

    fig.update_layout(height=700, showlegend=False)

Support Vector Machine (SVM), based on Nello Cristianini's presentation. Basic idea: use a Linear Learning Machine (LLM), and overcome the linearity constraints by mapping the data non-linearly to a higher dimension. This blog will go further into how the SVM predicts and classifies non-linearly separable cases by leveraging the soft margin and kernel tricks.

An SVM can handle both classification and regression on linear and non-linear data. This is one of the reasons we use SVMs in machine learning. SVMs are used in applications like handwriting recognition, intrusion detection, face detection, email classification, gene classification, and in web pages.

Background: Handwriting recognition is a well-studied subject in computer vision and has found wide applications in our daily life (such as USPS mail sorting). In this project, we will explore various machine learning techniques for recognizing handwritten digits.

SVM example with Iris Data in R

Use the library e1071; you can install it using `install.packages("e1071")`. The iris data has the columns Sepal.Length, Sepal.Width, Petal.Length, Petal.Width and Species.

Create an SVM model and show its summary:

    svm_model <- svm(Species ~ ., data = iris)
    summary(svm_model)

Alternatively, divide the iris data into x (containing all the features) and y (only the classes), then create the SVM model and show its summary:

    x <- subset(iris, select = -Species)
    y <- iris$Species
    svm_model1 <- svm(x, y)
    summary(svm_model1)

Run the prediction; you can also measure its execution time in R:

    pred <- predict(svm_model1, x)
    system.time(pred <- predict(svm_model1, x))

See the confusion matrix of the prediction, using the command `table` to compare the SVM prediction with the class data in the y variable:

    table(pred, y)

Tune the SVM to find the best cost and gamma. The tuning output reports the sampling method as 10-fold cross validation:

    svm_tune <- tune(svm, train.x = x, train.y = y, ...)

After you find the best cost and gamma, you can create the SVM model again and run it again:

    svm_model_after_tune <- svm(Species ~ ., data = iris, kernel = "radial", cost = 1, gamma = 0.5)
    summary(svm_model_after_tune)

Run the prediction again with the new model, then check the confusion matrix with `table` as before:

    pred <- predict(svm_model_after_tune, x)
    system.time(predict(svm_model_after_tune, x))
    table(pred, y)
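For readers who work in Python, like the plotly question earlier on this page, the tuning workflow of the R example can be approximated with scikit-learn. This is only a rough analogue, not the e1071 API: `GridSearchCV` stands in for `tune`, the parameter `C` plays the role of `cost`, and the grid values below are hypothetical since the source does not preserve the ranges that were searched.

```python
from sklearn.datasets import load_iris
from sklearn.metrics import confusion_matrix
from sklearn.model_selection import GridSearchCV
from sklearn.svm import SVC

# Iris features (4 columns) and class labels (3 species).
X, y = load_iris(return_X_y=True)

# Hypothetical search grid; "C" corresponds to e1071's "cost".
param_grid = {"C": [0.1, 1, 10, 100], "gamma": [0.5, 1, 2]}

# 10-fold cross validation over the grid, with an RBF ("radial") kernel.
search = GridSearchCV(SVC(kernel="rbf"), param_grid, cv=10)
search.fit(X, y)

# best_estimator_ is refit on the full data with the best parameters.
best_model = search.best_estimator_
pred = best_model.predict(X)

# Rows are true classes, columns are predicted classes
# (the transpose of R's table(pred, y)).
cm = confusion_matrix(y, pred)

print(search.best_params_)
print(cm)
```

As in the R example, the confusion matrix here is computed on the same data used for fitting, so it shows training performance rather than an estimate of generalization; the cross-validated score in `search.best_score_` is the fairer number to report.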
